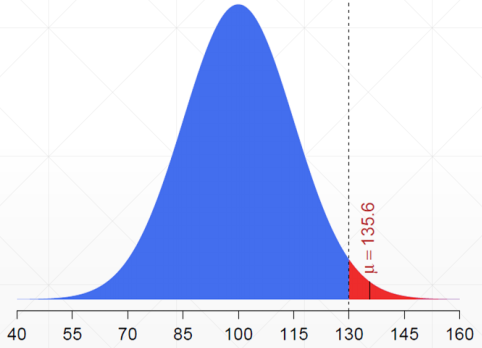

Posted by Xianzhi Du, Software Engineer and Jaeyoun Kim, Technical Program Manager, Google Research

Convolutional neural networks created for image tasks typically encode an input image into a sequence of intermediate features that capture the semantics of an image (from local to global), where each subsequent layer has a lower spatial dimension. However, this scale-decreased model may not be able to deliver strong features for multi-scale visual recognition tasks where recognition and localization are both important (e.g., object detection and segmentation). Several works including FPN and DeepLabv3+ propose multi-scale encoder-decoder architectures to address this issue, where a scale-decreased network (e.g., a ResNet) is taken as the encoder (commonly referred to as a backbone model). A decoder network is then applied to the backbone to recover the spatial information.

While this architecture has yielded improved success for image recognition and localization tasks, it still relies on a scale-decreased backbone that throws away spatial information by down-sampling, which the decoder then must attempt to recover. What if one were to design an alternate backbone model that avoids this loss of spatial information, and is thus inherently well-suited for simultaneous image recognition and localization?

In our recent CVPR 2020 paper “SpineNet: Learning Scale-Permuted Backbone for Recognition and Localization”, we propose a meta architecture called a scale-permuted model that enables two major improvements on backbone architecture design. First, the spatial resolution of intermediate feature maps should be able to increase or decrease anytime so that the model can retain spatial information as it grows deeper. Second, the connections between feature maps should be able to go across feature scales to facilitate multi-scale feature fusion. We then use neural architecture search (NAS) with a novel search space design that includes these features to discover an effective scale-permuted model. We demonstrate that this model is successful in multi-scale visual recognition tasks, outperforming networks with standard, scale-reduced backbones. To facilitate continued work in this space, we have open sourced the SpineNet code to the TensorFlow TPU GitHub repository in TensorFlow 1 and the TensorFlow Model Garden GitHub repository in TensorFlow 2.

A scale-decreased backbone is shown on the left and a scale-permuted backbone is shown on the right. Each rectangle represents a building block. Colors and shapes represent different spatial resolutions and feature dimensions. Arrows represent connections among building blocks.

In order to efficiently design the architecture for SpineNet, and avoid a time-intensive manual search of what is optimal, we leverage NAS to determine an optimal architecture. Taking the ResNet-50 backbone as the seed for the NAS search, we first learn scale-permutation and cross-scale connections. All candidate models in the search space have roughly the same computation as ResNet-50 since we just permute the ordering of feature blocks to obtain candidate models.

The architecture search process from a scale-decreased backbone to a scale-permuted backbone.
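To make the difference between a scale-decreased and a scale-permuted ordering concrete, here is a minimal Python sketch. It is not the SpineNet implementation or its search space. It assumes four hypothetical blocks at feature levels 2 to 5 (spatial size is the input size divided by 2^level), each with the same made-up cost, and it assumes that a block's cost does not change when the block is moved. All names (`Block`, `permute`, `resample_factor`) are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Block:
    name: str
    level: int  # feature level: spatial size = input_size / 2**level
    cost: int   # hypothetical relative compute cost (assumption)


# A scale-decreased ordering: the level never decreases with depth.
SCALE_DECREASED = [
    Block("b1", 2, 1),
    Block("b2", 3, 1),
    Block("b3", 4, 1),
    Block("b4", 5, 1),
]


def spatial_size(block, input_size=224):
    """Side length of the feature map produced at this block's level."""
    return input_size // (2 ** block.level)


def permute(blocks, order):
    """Return the blocks rearranged according to `order` (a permutation of indices)."""
    if sorted(order) != list(range(len(blocks))):
        raise ValueError("order must be a permutation of block indices")
    return [blocks[i] for i in order]


def total_cost(blocks):
    """Sum of per-block costs; unchanged by reordering under the stated assumption."""
    return sum(b.cost for b in blocks)


def is_scale_decreased(blocks):
    """True if the feature level never decreases from one block to the next."""
    levels = [b.level for b in blocks]
    return all(a <= b for a, b in zip(levels, levels[1:]))


def resample_factor(src, dst):
    """Spatial scale factor needed to connect src to dst (>1 means up-sampling)."""
    return 2.0 ** (src.level - dst.level)


if __name__ == "__main__":
    permuted = permute(SCALE_DECREASED, [2, 0, 3, 1])
    print([spatial_size(b) for b in SCALE_DECREASED])
    print([spatial_size(b) for b in permuted])
    print(total_cost(SCALE_DECREASED), total_cost(permuted))
    print(resample_factor(permuted[0], permuted[1]))
```

In this toy setting the permuted ordering visits resolutions out of order while the summed cost stays the same, and any connection between blocks at different levels needs a resize by the printed factor. How that resize is done, and which connections exist, is what the actual search decides and is not modeled here.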
